I often noticed default values for database file autogrow settings during my audits. You don’t trust me? Let me talk about a funny story about an incredible (and extreme) situation that concerns a transaction log file and its default settings.

First of all, during my intervention, my customer complained of some performance issues, so I decided to take a look at different areas of its instance.

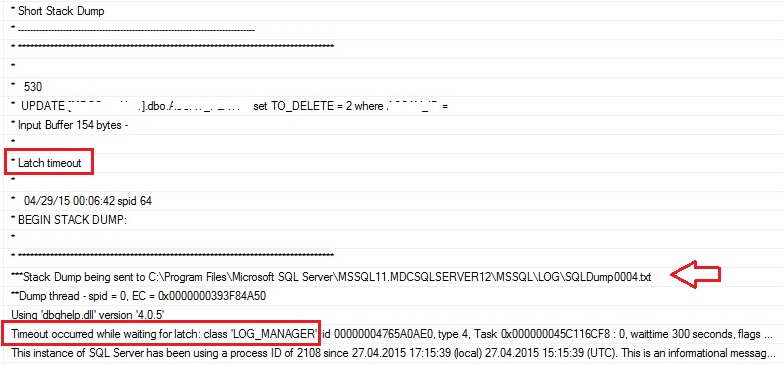

I found some interesting records inside the SQL Server error log file as follows:

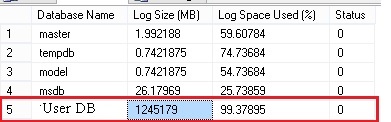

A timeout that occurred while waiting for latch class LOG_MANAGER … Ouch, it was the first time I faced this issue and after taking a look at the latch stats it confirmed that an issue existed on this area:

![]()

Unfortunately, the Microsoft documentation is very poor concerning the description of this latch type.

![]()

But Paul Randal gives some clues on his very useful blog here. This latch may appear when transaction log file is growing (whatever the reason). After some investigations, I effectively found that the growing issue was due to an incorrect recovery model configuration (FULL and no backup log in this context).

But that’s not all and this is where the funny part of this story comes. To be honest, I have never seen a latch timeout causing by a growing of a transaction log file except on this situation. I first suspected the storage here but in fact after taking a look at the transaction log file size and the associated growth parameter, I definitely rule out this suspicion (at least the real problem was not here).

…

![]()

I replaced the real database name of my customer by User DB here. So what do you notice? No you don’t dream … the transaction log file size = 1.2 TB with a default auto growth configuration = 10%! So I imagine that you begin to understand where the problem is located 🙂

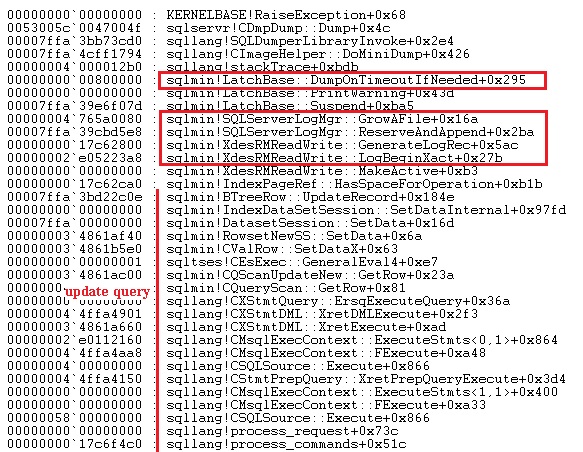

So, just for curiosity let’s have a look at the generated dump file:

So, it seems that an update query has generated log records after updating a BTree structure (sqlmin!BTreeRow:UpdateRecord()). In turn, the log manager tried to expand the transaction log file (sqlmin!SQLServerLogMgr::GrowAFile() method) without success after SQL Server has generated log records (sqlmin!XdesRMReadWrite::LogBeginXact() –> sqlmin!XdesRMReadWrite:GenerateLogRec() methods) . In this context, I guess that the expand operation has taken a very long time because 10% of the transaction log file represents a large chunk of disk space (roughly 100GB).

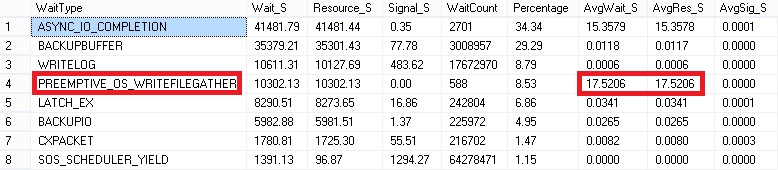

Finally let’s have a look at the wait stats:

We can also correlate the PREEMPTIVE_OS_WRITEFILEGATHER wait type to our issue here with the high average value. Zeroing the new portion of the transaction log file may be very long in our context.

My intention was not to blame my customer here but it was a good opportunity to point out the potential issue you may face with default database file auto growth settings!

See you

By David Barbarin

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/12/microsoft-square.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/OLS_web-min-scaled.jpg)