By Franck Pachot

.

How to migrate from non-CDB to CDB? Of course all known migration methods works. But there is also another solution: upgrade to 12c if necessary and then convert the non-CDB to a PDB. This is done with the noncdb_to_pdb.sql which converts a non-CDB dictionary to a PDB one, with metadata and object links. But do you get a clean PDB after that ? I tested it and compared the result with same database migrated by transportable tablespaces.

The test case

In 12c I can use Full Transportable database, but here I’ve only one tablespace as I’m doing my comparison on an empty database with the EXAMPLE schemas.

Here is my database:

RMAN> report schema; Report of database schema for database with db_unique_name NDB1 List of Permanent Datafiles =========================== File Size(MB) Tablespace RB segs Datafile Name ---- -------- -------------------- ------- ------------------------ 1 790 SYSTEM YES /u01/app/oracle/oradata/NDB1/system01.dbf 3 610 SYSAUX NO /u01/app/oracle/oradata/NDB1/sysaux01.dbf 4 275 UNDOTBS1 YES /u01/app/oracle/oradata/NDB1/undotbs01.dbf 5 1243 EXAMPLE NO /u01/app/oracle/oradata/NDB1/example01.dbf 6 5 USERS NO /u01/app/oracle/oradata/NDB1/users01.dbf

It’s a new database, created with dbca, all defaults, and having only the EXAMPLE tablespace. SYSTEM is 790MB and SYSAUX is 610MB. We can have a lot of small databases like that, where system size is larger than user size and this is a reason to go to multitenant.

I will compare the following:

- the migration with transportable tablespaces (into pluggable database PDB_TTS)

- the plug and run noncdb_to_pdb (into the pluggable database PDB_PLG)

Transportable tablespace

Transportable tablespace will plug only the non system tablespaces and all the dictionary entries are recreated while importing metadata. Here it is:

SQL> alter tablespace EXAMPLE read only; Tablespace altered. SQL> host expdp '"/ as sysdba"' transport_tablespaces='EXAMPLE'

The log gives me the dump file (containing the metadata) and the datafiles to copy:

Master table "SYS"."SYS_EXPORT_TRANSPORTABLE_01" successfully loaded/unloaded ****************************************************************************** Dump file set for SYS.SYS_EXPORT_TRANSPORTABLE_01 is: /u01/app/oracle/admin/NDB1/dpdump/expdat.dmp ****************************************************************************** Datafiles required for transportable tablespace EXAMPLE: /u01/app/oracle/oradata/NDB1/example01.dbf Job "SYS"."SYS_EXPORT_TRANSPORTABLE_01" successfully completed at ... elapsed 00:03:55

then on the destination I create an empty pluggable database:

SQL> create pluggable database PDB_TTS admin user pdbadmin identified by "oracle" file_name_convert=('/pdbseed/','/PDB_TTS/');

SQL> alter pluggable database PDB_TTS open;

SQL> alter session set container=PDB_TTS;

and import the metadata after having copied the datafile to /u03:

SQL> create or replace directory DATA_PUMP_DIR_NDB1 as '/u01/app/oracle/admin/NDB1/dpdump'; SQL> host impdp '"sys/oracle@//vm211:1666/pdb_tts as sysdba"' transport_datafiles=/u03/app/oracle/oradata/PDB_TTS/example01.dbf directory=DATA_PUMP_DIR_NDB1

which took only two minutes because I don’t have a lot of objects. That’s all. I have a brand new pluggable database where I’ve imported my tablespaces.

Here I used the transportable tablespace and had to pre-create the users. But in 12c you can do everything with Full Tabsportable Database.

noncdb_to_pdb.sql

The other solution is to plug the whole database, including the SYSTEM and SYSAUX tablespaces, and then run the noncdb_to_pdb.sql script to transform the dictionary to a multitenant one. First, we generate the xml describing the database, which is similar to the one generated when we unplug a PDB:

SQL> shutdown immediate

SQL> startup open read only;

SQL> exec dbms_pdb.describe('/tmp/NDB01.xml');

And then plug it:

SQL> CREATE PLUGGABLE DATABASE PDB_PLG USING '/tmp/NDB01.xml' COPY FILE_NAME_CONVERT = ('/u01/app/oracle/oradata/NDB1', '/u03/app/oracle/oradata/PDB_PLG');

At that point I can open the PDB but it will act as a Non-CDB, with its own dictionary that is not linked to the root. For example, you have nothing when you query DBA_PDBS from the PDB:

SQL> show con_id CON_ID ------------------------------ 6 SQL> select * from dba_pdbs; no rows selected

I put in my todo list to test what we can do in that PDB which is not yet a PDB before raising lot of ORA-600.

Now you have to migrate the dictionary to a PDB one. The noncdb_to_pdb.sql will do internal updates to transform the entries in OBJ$ to be metadata links.

SQL> alter session set container=PDB_PLG; SQL> @?/rdbms/admin/noncdb_to_pdb; SQL> alter pluggable database PDB_PLG open;

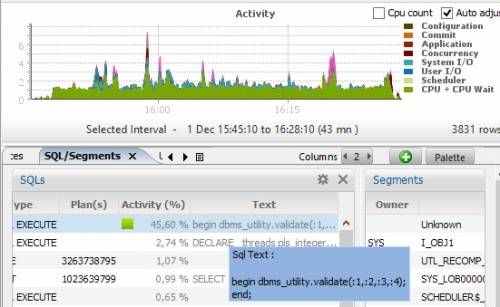

The updates will depend on the number of dictionary objects, so that is fixed for the version. And the remaining time is to recompile all objects, but that can be done in parallel. Here, I’ve run it in serial to see how long it takes (screenshot from Lighty):

Comparison

My goal was to compare both methods. As I expected, the SYSTEM and SYSAUX tablespaces did not decrease when using the noncdb_to_pdb, so if you want to go to multitenant to save space, the noncdb_to_pdb method is not the good one:

RMAN> report schema; using target database control file instead of recovery catalog Report of database schema for database with db_unique_name CDB1_SITE1 List of Permanent Datafiles =========================== File Size(MB) Tablespace RB segs Datafile Name ---- -------- -------------------- ------- ------------------------ 1 781 SYSTEM YES /u02/app/oracle/oradata/cdb1_site1/system01.dbf 3 691 SYSAUX NO /u02/app/oracle/oradata/cdb1_site1/sysaux01.dbf 4 870 UNDOTBS1 YES /u02/app/oracle/oradata/cdb1_site1/undotbs01.dbf 5 260 PDB$SEED:SYSTEM NO /u02/app/oracle/oradata/cdb1_site1/pdbseed/system01.dbf 6 5 USERS NO /u02/app/oracle/oradata/cdb1_site1/users01.dbf 7 570 PDB$SEED:SYSAUX NO /u02/app/oracle/oradata/cdb1_site1/pdbseed/sysaux01.dbf 8 260 PDB1:SYSTEM NO /u02/app/oracle/oradata/cdb1_site1/pdb1/system01.dbf 9 580 PDB1:SYSAUX NO /u02/app/oracle/oradata/cdb1_site1/pdb1/sysaux01.dbf 10 10 PDB1:USERS NO /u02/app/oracle/oradata/cdb1_site1/pdb1/pdb1_users01.dbf 14 270 PDB_TTS:SYSTEM NO /u02/app/oracle/oradata/cdb1_site1/PDB_TTS/system01.dbf 15 590 PDB_TTS:SYSAUX NO /u02/app/oracle/oradata/cdb1_site1/PDB_TTS/sysaux01.dbf 17 1243 PDB_TTS:EXAMPLE NO /u03/app/oracle/oradata/PDB_TTS/example01.dbf 22 790 PDB_PLG:SYSTEM NO /u03/app/oracle/oradata/PDB_PLG/system01.dbf 23 680 PDB_PLG:SYSAUX NO /u03/app/oracle/oradata/PDB_PLG/sysaux01.dbf 24 5 PDB_PLG:USERS NO /u03/app/oracle/oradata/PDB_PLG/users01.dbf 25 1243 PDB_PLG:EXAMPLE NO /u03/app/oracle/oradata/PDB_PLG/example01.dbf

The SYSTEM tablespace which is supposed to contain only links (my user schemas don’t have a lot of objects) is the same size as the root. This is bad. Let’s look at the detail:

SQL> select *

from (select nvl(pdb_name,'CDB$ROOT') pdb_name,owner,segment_type,bytes from cdb_segments

left outer join dba_pdbs using(con_id))

pivot (sum(bytes/1024/1024) as "MB" for (pdb_name)

in ('CDB$ROOT' as "CDB$ROOT",'PDB_TTS' as PDB_TTS,'PDB_PLG' as PDB_PLG))

order by greatest(nvl(PDB_TTS_MB,0),nvl(PDB_PLG_MB,0))-least(nvl(PDB_TTS_MB,0),nvl(PDB_PLG_MB,0))

desc fetch first 20 rows only;

OWNER SEGMENT_TYPE CDB$ROOT_MB PDB_TTS_MB PDB_PLG_MB

-------------------- ------------------ ----------- ---------- ----------

SYS TABLE 539 96 540

SYS INDEX 187 109 195

SYS LOBSEGMENT 117 105 118

SYS TABLE PARTITION 17 1 12

SYSTEM INDEX 10 1 10

SYS SYSTEM STATISTICS 8

SYSTEM TABLE 8 1 8

SYS LOBINDEX 12 7 13

SYS INDEX PARTITION 9 0 6

SYSTEM LOBSEGMENT 5 0 5

APEX_040200 LOBSEGMENT 80 74 80

SYSTEM INDEX PARTITION 4 4

SYSTEM TABLE PARTITION 3 3

SYS TABLE SUBPARTITION 2 2

SYS CLUSTER 52 50 52

SYS LOB PARTITION 3 1 2

SYSTEM LOBINDEX 2 0 2

APEX_040200 TABLE 100 99 100

XDB TABLE 7 6 7

AUDSYS LOB PARTITION 1 0 1

20 rows selected.

Here I’ve compared the dictionary sizes. While the PDB_TTS table segments are below 100MB, the PDB_PLG is the same size as the root. The noncdb_to_pdb has updated OBJ$ but did not delete the rows reclaim space from other tables (see update 2).

Which tables?

SQL> select *

from (select nvl(pdb_name,'CDB$ROOT') pdb_name,owner,segment_type,segment_name,bytes

from cdb_segments left outer join dba_pdbs using(con_id)

where owner='SYS' and segment_type in ('TABLE'))

pivot (sum(bytes/1024/1024) as "MB" for (pdb_name)

in ('CDB$ROOT' as "CDB$ROOT",'PDB_TTS' as PDB_TTS,'PDB_PLG' as PDB_PLG))

order by greatest(nvl(PDB_TTS_MB,0),nvl(PDB_PLG_MB,0))-least(nvl(PDB_TTS_MB,0),nvl(PDB_PLG_MB,0))

desc fetch first 20 rows only;

OWNER SEGMENT_TYPE SEGMENT_NAME CDB$ROOT_MB PDB_TTS_MB PDB_PLG_MB

----------------- ------------------ ------------------------------ ----------- ---------- ----------

SYS TABLE IDL_UB1$ 288 3 288

SYS TABLE SOURCE$ 51 2 52

SYS TABLE IDL_UB2$ 32 13 32

SYS TABLE ARGUMENT$ 13 0 13

SYS TABLE IDL_CHAR$ 11 3 11

The IDL_UB1$ is the table that contains all the pcode for pl/sql. All those wrapped dbms_ packages are there. And we don’t need them in the PDB: we have link to the root which has exactly the same version.

Conclusion

My conclusion is that I’ll not advise to use using noncdb_to_pdb. First, that script doing a lot of internal stuff scares me. I prefer to start that new implementation of the dictionary with a clean one.

But now that I made this test, I’ve two additional reasons to avoid it. First, it’s not faster – except if you have a lot of objects. And the main goal is to reduce the total space by having the oracle packages stored only once. And this is cleary not done by the noncdb_to_pdb.

However, that conclusion is only for small databases. If you a database with a huge number of objects and pl/sql packages, then the overhead to keep the dictionary objects will not be very significant. And the TSS solution will be longer because it has to import all metadata. So there is still a case for noncdb_to_pdb. But test is before. And be sure to have a large shared pool for the recompile step.

Update 1: I forgot to add another reason to be very careful with noncdb_to_pdb from Bertrand Drouvot in his post about optimizer_adaptive_features huge negative impact on it.

Update 2: From a comment on OTN forum I changed the sentence about deleted rows because it was wrong. In fact, rows are deleted when the objects are recompiled:

SQL> select name,count(*) from containers(IDL_UB1$) left outer join v$containers using(con_id) group by name order by 1; NAME COUNT(*) ------------------------------ ---------- CDB$ROOT 53298 PDB1 6457 PDB_PLG 6432 PDB_TTS 6354 SQL> select name,count(*) from containers(SOURCE$) left outer join v$containers using(con_id) group by name order by 1; NAME COUNT(*) ------------------------------ ---------- CDB$ROOT 327589 PDB1 73055 PDB_PLG 20306 PDB_TTS 17753

The issue is only that space is still allocated. And you can’t SHRINK those objects because SYSTEM is DMT, and anyway the large tables contain LONG, and finally:

SQL> alter table sys.IDL_UB1$ shrink space; alter table sys.IDL_UB1$ shrink space * ERROR at line 1: ORA-65040: operation not allowed from within a pluggable database

Of course, the space can be reused, but do you expect to add 200MB of compiled pl/sql in future releases?

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/12/oracle-square.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/DWE_web-min-scaled.jpg)