By Mouhamadou Diaw

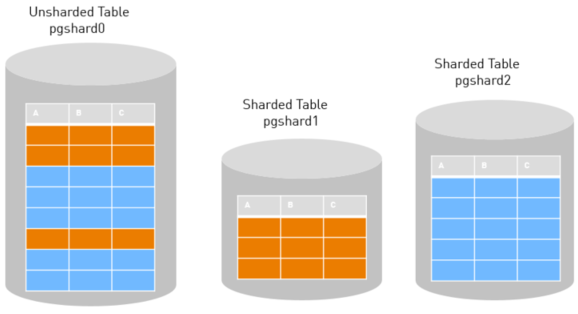

In this article we are going to talk about sharding in PostgreSQL. What is sharding, Sharding is like partitioning. The difference is that with traditional partioning, partitions are stored in the same database while sharding shards (partitions) are stored in different servers.

Below is an example of sharding configuration we will use for our demonstration

PostgreSQL does not provide built-in tool for sharding. We will use citus which extends PostgreSQL capability to do sharding and replication.

We will use 3 servers

pgshard0: 192.168.1.50

pgshard1: 192.168.1.51

pgshard2: 192.168.1.52

First let’s install PostgreSQL + citus extension on all servers (pgshard0, pgshard1, pgshard2). We suppose of course that network is configured so that all server can communicate

[root@pgshard0 ~]# yum install -y https://download.postgresql.org/pub/repos/yum/9.5/redhat/rhel-7-x86_64/pgdg-oraclelinux95-9.5-2.noarch.rpm

[root@pgshard0 ~]# yum install -y citus_95.x86_64

Now let’s to initialize a new database system on all servers (pgshard0, pgshard1, pgshard2)

[root@pgshard0 ~]# /usr/pgsql-9.5/bin/postgresql95-setup initdb

Initializing database ... OK

To load citus extension we have to edit the /var/lib/pgsql/9.5/data/postgresql.conf file and add the following line

shared_preload_libraries = ‘citus’

[root@pgshard0 data]# grep shared_preload_libraries /var/lib/pgsql/9.5/data/postgresql.conf

#shared_preload_libraries = '' # (change requires restart)

shared_preload_libraries = 'citus'

[root@pgshard0 data]#

To allow postgreSQL connection between servers we have to configure two configuration files /var/lib/pgsql/9.5/data/postgresql.conf and /var/lib/pgsql/9.5/data/pg_hba.conf

[root@pgshard0 data]# grep listen postgresql.conf

listen_addresses = '*' # what IP address(es) to listen on;

[root@pgshard0 data]#

[root@pgshard0 data]# grep trust pg_hba.conf

# METHOD can be "trust", "reject", "md5", "password", "gss", "sspi",

host all all 127.0.0.1/32 trust

host all all 192.168.1.0/24 trust

host all all ::1/128 trust

[root@pgshard0 data]#

Let’s now start database on all servers (pgshard0, pgshard1, pgshard2)

[root@pgshard0 data]# service postgresql-9.5 start

Redirecting to /bin/systemctl start postgresql-9.5.service

[root@pgshard0 data]#

We need to inform the master (pgshard0) about its workers (pgshard1 and pgshard2). For this we have to create a configuration file pg_worker_list.conf like this

[root@pgshard0 data]# pwd

/var/lib/pgsql/9.5/data

[root@pgshard0 data]# cat pg_worker_list.conf

pgshard1 5432

pgshard2 5432

[root@pgshard0 data]#

Let’s now reload the master pgshard0 to take into account the modification

[root@pgshard0 ~]# service postgresql-9.5 reload

Redirecting to /bin/systemctl reload postgresql-9.5.service

[root@pgshard0 ~]#

Very important: we must create citus extension on both servers

postgres=# create extension citus;

CREATE EXTENSION

postgres=# \dx

List of installed extensions

Name | Version | Schema | Description

---------+---------+------------+------------------------------

citus | 5.2-1 | pg_catalog | Citus distributed database

plpgsql | 1.0 | pg_catalog | PL/pgSQL procedural language

(2 rows)

The last step before the sharding is now to verify that the master is ready. This SELECT command we will run in the psql shell should output the worker nodes mentioned in the pg_worker_list.conf file.

postgres=# SELECT * FROM master_get_active_worker_nodes();

node_name | node_port

-----------+-----------

pgshard2 | 5432

pgshard1 | 5432

(2 rows)

postgres=#

Every thing is going fine until now, so we can create on the master the table to be sharded. Let’s say table sales

postgres=# CREATE TABLE sales

postgres-# (deptno int not null,

postgres(# deptname varchar(20),

postgres(# total_amount int,

postgres(# CONSTRAINT pk_sales PRIMARY KEY (deptno)) ;

CREATE TABLE

We need have inform Citus that data of table sales will be distributed among pghard1 and pgshard2. In our case we choose a hash distribution.

postgres=# SELECT master_create_distributed_table('sales', 'deptno', 'hash');

master_create_distributed_table

---------------------------------

(1 row)

In our example we are going to create one shard on each worker. We will Specify

the table name : sales

total shard count : 2

replication factor : 1 –No replication

postgres=# SELECT master_create_worker_shards('sales', 2, 1);

master_create_worker_shards

-----------------------------

(1 row)

postgres=#

And guys it’s all. Sharding is done. Connecting to pgshard1 and pgshard2 we can verify that shards were created with the same structure than the base table.

[postgres@pgshard1 bin]$ psql -c "\dt"

List of relations

Schema | Name | Type | Owner

--------+--------------+-------+----------

public | sales_102026 | table | postgres

(1 row)

[postgres@pgshard2 ~]$ psql -c "\dt"

List of relations

Schema | Name | Type | Owner

--------+--------------+-------+----------

public | sales_102027 | table | postgres

(1 row)

Now let’s insert some rows in the table from the master

postgres=# insert into sales (deptno,deptname,total_amount) values (1,'french_dept',10000);

INSERT 0 1

postgres=# insert into sales (deptno,deptname,total_amount) values (2,'german_dept',15000);

INSERT 0 1

postgres=# insert into sales (deptno,deptname,total_amount) values (3,'china_dept',21000);

INSERT 0 1

postgres=# insert into sales (deptno,deptname,total_amount) values (4,'gambia_dept',8750);

INSERT 0 1

postgres=# insert into sales (deptno,deptname,total_amount) values (5,'japan_dept',12010);

INSERT 0 1

postgres=# insert into sales (deptno,deptname,total_amount) values (6,'china_dept',35000);

INSERT 0 1

postgres=# insert into sales (deptno,deptname,total_amount) values (7,'nigeria_dept',10000);

INSERT 0 1

postgres=# insert into sales (deptno,deptname,total_amount) values (8,'senegal_dept',33000);

INSERT 0 1

postgres=#

We can see that data are distributed between pgshard1 and pgshard2

[postgres@pgshard1 bin]$ ./psql

psql (9.5.4)

Type "help" for help.

postgres=# select * from sales_102026;

deptno | deptname | total_amount

--------+--------------+--------------

1 | french_dept | 10000

3 | india_dept | 21000

4 | gambia_dept | 8750

5 | japan_dept | 12010

7 | nigeria_dept | 10000

8 | senegal_dept | 33000

(6 rows)

[postgres@pgshard2 bin]$ ./psql

psql (9.5.4)

Type "help" for help.

postgres=# select * from sales_102027;

deptno | deptname | total_amount

--------+-------------+--------------

2 | german_dept | 15000

6 | china_dept | 35000

(2 rows)

If we do an explain from the master pgshard0, we note that query is routed to different shard depending of data

postgres=# explain verbose select * from sales where deptno=5;

QUERY PLAN

--------------------------------------------------------------------------------------------------------------

Distributed Query

Executor: Router

Task Count: 1

Tasks Shown: All

-> Task

Node: host=pgshard1 port=5432 dbname=postgres

-> Index Scan using pk_sales_102026 on public.sales_102026 sales (cost=0.15..8.17 rows=1 width=66)

Output: deptno, deptname, total_amount

Index Cond: (sales.deptno = 5)

(9 rows)

postgres=# explain verbose select * from sales where deptno=6;

QUERY PLAN

--------------------------------------------------------------------------------------------------------------

Distributed Query

Executor: Router

Task Count: 1

Tasks Shown: All

-> Task

Node: host=pgshard2 port=5432 dbname=postgres

-> Index Scan using pk_sales_102027 on public.sales_102027 sales (cost=0.15..8.17 rows=1 width=66)

Output: deptno, deptname, total_amount

Index Cond: (sales.deptno = 6)

(9 rows)

Conclusion:

In this article we show that PostgreSQL allows to do many interesting things. Use of extensions can considerably extend PostgreSQL capability

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/12/oracle-square.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/DWE_web-min-scaled.jpg)