I think you already have read the interesting series of blog posts of my colleagues Franck and Daniel about inserting on 1 million rows for Oracle and PostGreSQL. So it’s time to write the first of the same series concerning SQL Server. First of all, just to clarify, the idea is not to make a direct comparison between Oracle, PostGreSQL and SQL Server but just to see variations that exist for each of them to insert quickly 1 million rows.

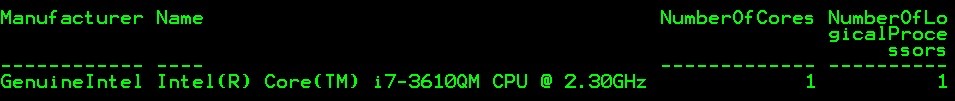

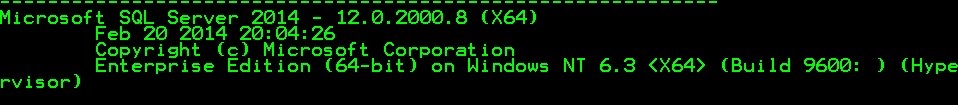

So I will perform the same basic test that my colleagues with roughly the same environment, one virtual machine on Hyper-V including only one processor, 512MB of memory and one SQL Server 2014 instance enterprise edition capped to 512 MB of memory:

Get-WmiObject –Class Win32_processor | ft Manufacturer, Name, NumberOfCores, NumberOfLogicalProcessors –Autosize

SELECT @@VERSION

SELECT

name,

value_in_use

FROM sys.configurations

WHERE name = 'max server memory (MB)'

![]()

Row-by-row method

Let’s start by using the same test tables with one heap table, clustered table and the same kind of script as well. I just modified the original script written by Franck but translating PL-SQL in T-SQL implies often using a completely different syntax but anyway, we will produce roughly the same bunch of data.

My user objects are stored to an user database called DEMO for this first test:

if object_id('DEMO', 'U') is not null

drop table DEMO;

create table DEMO("id" int , "text" varchar(15), "number" int);

if object_id('DEMO_PK', 'U') is not null

drop table DEMO_PK;

create table DEMO_PK("id" int , "text" varchar(15), "number" int,

constraint demo_pk_pk primary key (id) );

…

DECLARE @i INT = 1;

WHILE @i <= 1000000

BEGIN

INSERT INTO DEMO VALUES (@i, CASE ROUND(RAND() * 10, 0) WHEN 1 THEN 'Marc' WHEN 2 THEN'Bill' WHEN 3 THEN 'George' WHEN 4 THEN 'Eliot' WHEN 5 THEN 'Matt' WHEN 6 THEN 'Trey' ELSE'Tracy' END, RAND() * 10000);

SET @i += 1;

END

Here my first result for both tables:

— 00:02:29 – Heap table

— 00:02:25 – table with clustered index

There are no big differences between inserting data into a heap table and a clustered table in this scenario because we insert rows basically in the same manner (always in the last page). At this point it is important to keep in mind that by default SQL Server uses implicit transaction mode. It means that each insert statement represents a transaction which has to be commited to the transaction log.

If we take a look at the specific wait statistics we can expect that the most waits will concern the log writes activity.

![]()

That’s it! The average values are pretty low but our results are far away from those of my colleagues. Let’s motivated and let’s talk about a kind of workaroud to speed-up the insert query. In fact, putting the user objects on tempdb database might be a kind of workaround. The main drawback is that tempdb database is temporary by design. Thus, our user objects will be persisted until the restart of the SQL Server but let’s perform the previous test on tempdb.

Here the results I get from this test:

— 00:00:16 – Heap table

— 00:00:15 – table with clustered index

So, a big improvement here. Furthermore we may notice that related wait statistics have also changed as follows:

![]()

This main wait type is just related to a sustained CPU usage … so our final result is not so bad. At this point we may wonder why putting user objects on tempdb database increases the global procedure? In fact, we’re using the special logging mechanism used by tempdb database that includes the lazy commit feature and the nonlogged after image feature for insert and update statements.

Go back to the user database DEMO and let’s finish this row-by-row section by inserting data in an single transaction (or explicit mode) and let’s take a look at the following results:

begin transaction

declare @i int = 1;

while @i <= 1000000

begin

insert DEMO values (@i, case cast(rand() * 10 as tinyint) when 1 then 'Marc' when 2 then'Bill' when 3 then 'George' when 4 then 'Eliot' when 5 then 'Matt' when 6 then 'Trey'

when 7 then 'Tracy' when 8 then'Greg' when 9 then 'Steve' else 'Patricia' end, rand() * 1000)

set @i = @i + 1;

end

commit transaction

— 00:00:10 – Heap table

— 00:00:09 – table with clustered index

As excepted, we may notice a drastic drop of the duration value of both tests.

Bulk-insert method

Row-by-row commit is not the strength of SQL Server becauseeach commit requires to flush data to the transaction log. So let’s switch to bulk insert mode now. There are several ways to bulk insert data with SQL Server (either from client or server side by using for instance bcp or SSIS tool, BULK INSERT, SELECT INTO or OPENROWSET command and so on). In this test, I will use bcp to export data to a file before importing this file to my two tables.

To export my data I used the bcp command as follows:

![]()

…

![]()

I used native data types (-n option) in this case because my test concerns only transferring data from and to SQL Server. This option can improve performance but to be honest with this bunch of data the difference is not relevant.

Let’s bulk import our data to the two tables DEMO and DEMO_PK in my user database (not tempdb this time). At this point I want to be sure to be more efficient and I will use minimal logging for bulk-import operations in order to reduce the possibility to fill the log space and the potential contention (as a reminder writing to the transaction log file is always synchronous by default). Moreover, don’t forget that in this mode writing to the data file switches from asynchronous to synchronous mode. So becareful about your storage performance to avoid facing some unexpected behaviours during your import process.

So for my tests, the database DEMO is configured to SIMPLE recovery model and I will use BUKL INSERT command with TABLOCK option (which is a requirement to use minimally logging). Using options is possible but after some testing they appear to be not helpful in this context.

Concerning my heap table:

bulk insert demo.dbo.DEMO

from 'C:bcpDEMO.dat'

with

(

DATAFILETYPE = 'native',

TABLOCK

)

SQL Server Execution Times:

CPU time = 703 ms, elapsed time = 725 ms.

Concerning my clustered table:

bulk insert demo.dbo.DEMO_PK

from 'C:bcpDEMO_PK.dat'

with

(

DATAFILETYPE = 'native',

ORDER (id ASC),

TABLOCK

)

SQL Server Execution Times:

CPU time = 1437 ms, elapsed time = 1489 ms.

A little bit higher execution time than bulk import to a heap table. My table with a clustered index seems to introduce some overheads.

The bottom line is pretty the same than my colleagues. Insert and committing data rows by rows is not an optimized way if you plan to import a lot of data. So let’s continue on the same way than my colleagues with the next post of this series until the famous In-Memory feature. Don’t forget that if you are in Switzerland in June, our experts from Oracle, Microsoft and SAP technologies will talk about In-Memory as implemented by SQL Server, Oracle and SAP HANA. All the same day. It’s free and you can register now: Event In-Memory: boost your IT performance

By David Barbarin

![Thumbnail [60x60]](https://www.dbi-services.com/blog/wp-content/uploads/2022/12/microsoft-square.png)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/08/NME_web-min-scaled.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2024/01/HME_web.jpg)

![Thumbnail [90x90]](https://www.dbi-services.com/blog/wp-content/uploads/2022/10/STS_web-min-scaled.jpg)