In PostgreSQL, when you create an index on a table, sessions that want to write to the table must, by default, wait until the index build has completed. There is a way around that, though, and in this post we’ll look at how you can do that.

As usual we’ll start with a little table:

postgres=# \! cat a.sql

drop table if exists t1;

create table t1 ( a int, b varchar(50));

insert into t1 select a.*, md5(a::varchar) from generate_series(1,5000000) a;

postgres=# \i a.sql

DROP TABLE

CREATE TABLE

INSERT 0 5000000

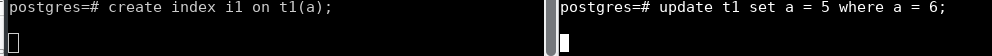

When you now create an index on that table and try to write the table at the same time from a different session that session will wait until the index is there (the screenshot shows the first session creating the index on the left and the second session doing the update on the right, which is waiting for the left one):

For production environments this is not something you want to happen, as it can block a lot of other sessions, especially when the table in question is heavily used. You can avoid that by using “create index concurrently”.
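A minimal sketch of what that looks like against the t1 table from above. The index name i1 is the one used in the rest of this post, and the update in the second session is only an illustrative write, not something the original test ran:

```sql
-- session 1: build the index without blocking writers on t1
create index concurrently i1 on t1(a);

-- session 2: issued while the build is still running;
-- this write does not have to wait for the index build
update t1 set b = 'changed' where a = 1;
```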

Using that syntax, writes to the table from other sessions will succeed while the index is being built. But, as clearly stated in the documentation, the downside is that the table needs to be scanned twice, so more work needs to be done, which means more resource usage on your server. Other points need to be considered as well. When, for whatever reason, your index build fails (e.g. because the create index statement is canceled):

postgres=# create index concurrently i1 on t1(a);

^CCancel request sent

ERROR: canceling statement due to user request

… you might expect the index not to be there at all, but this is not the case. When you try to create the index again right after the canceled statement, you’ll hit this:

postgres=# create index concurrently i1 on t1(a);

ERROR: relation "i1" already exists

This does not happen when you do not create the index concurrently:

postgres=# create index i1 on t1(a);

^CCancel request sent

ERROR: canceling statement due to user request

postgres=# create index i1 on t1(a);

CREATE INDEX

postgres=#

The question is: why does this happen in the concurrent case but not in the “normal” case? The reason is simple: When you create an index the “normal” way, the whole build is done in one transaction. Because of this, the index does not exist when the transaction is aborted (the create index statement is canceled). When you build the index concurrently, there are multiple transactions involved: “In a concurrent index build, the index is actually entered into the system catalogs in one transaction, then two table scans occur in two more transactions”. So in this case:

postgres=# create index concurrently i1 on t1(a);

ERROR: relation "i1" already exists

… the index is already stored in the catalog:

postgres=# create index concurrently i1 on t1(a);

^CCancel request sent

ERROR: canceling statement due to user request

postgres=# select relname,relkind,relfilenode from pg_class where relname = 'i1';

relname | relkind | relfilenode

---------+---------+-------------

i1 | i | 32926

(1 row)

If you don’t take care of that you will have invalid indexes in your database:

postgres=# \d t1

Table "public.t1"

Column | Type | Collation | Nullable | Default

--------+-----------------------+-----------+----------+---------

a | integer | | |

b | character varying(50) | | |

Indexes:

"i1" btree (a) INVALID

You might think that this does no harm, but then consider this case:

-- in session one build a unique index

postgres=# create unique index concurrently i1 on t1(a);

-- then in session two violate the uniqueness after some seconds

postgres=# update t1 set a = 5 where a = 4000000;

UPDATE 1

-- the create index statement will fail in the first session

postgres=# create unique index concurrently i1 on t1(a);

ERROR: duplicate key value violates unique constraint "i1"

DETAIL: Key (a)=(5) already exists.

This is even worse as the index now really consumes space on disk:

postgres=# select relpages from pg_class where relname = 'i1';

relpages

----------

13713

(1 row)

The index is invalid, of course, and will not be used by the planner:

postgres=# \d t1

Table "public.t1"

Column | Type | Collation | Nullable | Default

--------+-----------------------+-----------+----------+---------

a | integer | | |

b | character varying(50) | | |

Indexes:

"i1" UNIQUE, btree (a) INVALID

postgres=# explain select * from t1 where a = 12345;

QUERY PLAN

----------------------------------------------------------------------

Gather (cost=1000.00..82251.41 rows=1 width=37)

Workers Planned: 2

-> Parallel Seq Scan on t1 (cost=0.00..81251.31 rows=1 width=37)

Filter: (a = 12345)

(4 rows)
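Besides looking at \d for a single table, invalid indexes can be found across the whole database by querying the pg_index catalog. The following is a minimal sketch that relies on the indisvalid and indisready columns; the column aliases are just illustrative names:

```sql
-- list all indexes that are currently marked invalid
select n.nspname            as schema_name,
       c.relname            as index_name,
       i.indrelid::regclass as table_name,
       i.indisready         -- true: the index is still updated on writes
from pg_index i
     join pg_class c     on c.oid = i.indexrelid
     join pg_namespace n on n.oid = c.relnamespace
where not i.indisvalid;
```

Running something like this after a failed or canceled concurrent build shows which leftovers still need to be cleaned up.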

But the index is still maintained:

postgres=# select relpages from pg_class where relname = 'i1';

relpages

----------

13713

(1 row)

postgres=# insert into t1 select a.*, md5(a::varchar) from generate_series(5000001,6000000) a;

INSERT 0 1000000

postgres=# select relpages from pg_class where relname = 'i1';

relpages

----------

16454

(1 row)
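Note that relpages in pg_class is only an estimate that is refreshed by vacuum, analyze and a few DDL commands, so it can lag behind. As a small sketch, the current on-disk size of the index can also be read directly with pg_relation_size (the alias index_size is just an illustrative name):

```sql
-- current size of the (invalid) index on disk, human readable
select pg_size_pretty(pg_relation_size('i1')) as index_size;
```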

So now you have an index which cannot be used to speed up queries (which is bad) but which is still maintained when you write to the table (which is even worse because you consume resources for nothing). The recommended way out of this is to drop and re-create the index (from PostgreSQL 12 on, rebuilding it with “reindex index concurrently” is an alternative):

postgres=# drop index i1;

DROP INDEX

-- potentially clean up any rows that violate the constraint and then

postgres=# create unique index concurrently i1 on t1(a);

CREATE INDEX

postgres=# \d t1

Table "public.t1"

Column | Type | Collation | Nullable | Default

--------+-----------------------+-----------+----------+---------

a | integer | | |

b | character varying(50) | | |

Indexes:

"i1" UNIQUE, btree (a)

postgres=# explain select * from t1 where a = 12345;

QUERY PLAN

---------------------------------------------------------------

Index Scan using i1 on t1 (cost=0.43..8.45 rows=1 width=122)

Index Cond: (a = 12345)

(2 rows)

Remember: When a create index operation fails in concurrent mode, make sure that you drop the index immediately.
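On a busy system, even the cleanup can be done without blocking writers. The following is a sketch, assuming the invalid index is the i1 from this post and that any rows violating the unique constraint have already been fixed:

```sql
-- remove the invalid index without blocking writes on t1
drop index concurrently if exists i1;

-- then retry the build
create unique index concurrently i1 on t1(a);

-- alternative on PostgreSQL 12 and later: rebuild the invalid index in place
-- reindex index concurrently i1;
```

Like the concurrent build itself, none of these statements can be run inside a transaction block.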

One more thing to keep in mind: When you create an index concurrently and there is another session already modifying the data, the create index command waits until that other operation completes:

-- first session inserts data without completing the transaction

postgres=# begin;

BEGIN

Time: 0.579 ms

postgres=# insert into t1 select a.*, md5(a::varchar) from generate_series(6000001,7000000) a;

INSERT 0 1000000

-- second session tries to build the index

postgres=# create unique index concurrently i1 on t1(a);

The create index operation will wait until that transaction completes:

postgres=# select query,state,wait_event,wait_event_type from pg_stat_activity where state ='active';

query | state | wait_event | wait_event_t

----------------------------------------------------------------------+--------+------------+-------------

create unique index concurrently i1 on t1(a); | active | virtualxid | Lock

select query,state,wait_event,wait_event_type from pg_stat_activity; | active | |

… meaning that when someone forgets to end the transaction, the create index command will wait forever. There is the parameter idle_in_transaction_session_timeout, which gives you more control over that, but you still need to be aware of what is happening here.
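As a rough sketch of how this could be handled in practice: the first query lists sessions that are sitting idle inside an open transaction, and the second part sets the timeout instance-wide. The five-minute value is only an example and not a recommendation from this post:

```sql
-- sessions that hold a transaction open without doing anything, oldest first
select pid, usename, xact_start, now() - xact_start as xact_age, query
from pg_stat_activity
where state = 'idle in transaction'
order by xact_start;

-- terminate sessions that stay idle in a transaction for more than 5 minutes
-- (the value is only an example)
alter system set idle_in_transaction_session_timeout = '5min';
select pg_reload_conf();
```

The timeout only covers sessions that are idle in a transaction. A long-running statement in another session will still make the concurrent index build wait.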

Happy index creation 🙂
