Provisioning a K8s infrastructure can be done in different ways. Terraform has a Kubernetes provider, but it doesn't build or deploy a Kubernetes cluster: the cluster must already be up and running before you can use the provider. Fortunately, there are cloud-specific providers for creating the cluster itself, depending on which cloud you want to provision it on (for instance, the azurerm provider for AKS).

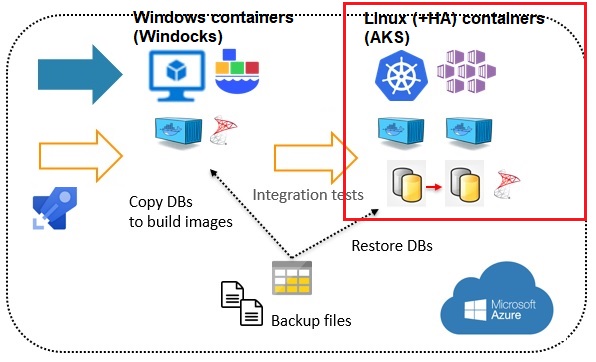

In our CI pipeline for MSSQL DMK maintenance, we provision SQL Server containers on Linux and then run various tests against them. Our K8s infrastructure is hosted on Azure through AKS. The main issue so far is that the cluster must exist before any containers can be deployed. On the other hand, testing periods are unpredictable and depend mainly on the team's availability. Our first approach was to leave the AKS cluster up and running all the time to avoid breaking the CI pipeline, but the obvious drawback is the cost. One solution is to use the Terraform provider for AKS from our Azure DevOps pipeline.

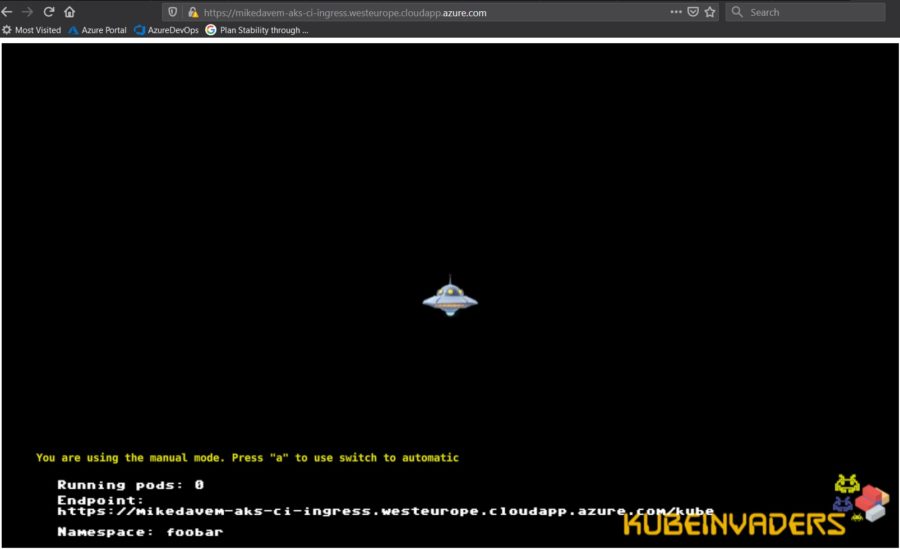

But in this blog post, let's start with something fun: provisioning the AKS infrastructure to host the KubeInvaders project. KubeInvaders is a fun way to explain the different components of K8s, and I will use it during my upcoming workshop, SQL Server on Kubernetes, at SQLSaturday Lisbon on November 29-30, 2019. Deploying this project on AKS requires additional components so that KubeInvaders can be reached from outside the cluster: an ingress controller, cert-manager and a cluster issuer. In turn, those components are deployed through Helm charts. This means we must also install Helm and Tiller (Tiller is only needed with Helm versions earlier than 3).

Before sharing my code, note that plenty of blog posts already explain how to build a Terraform plan that deploys an AKS cluster and Helm components. So I prefer to share the notes and issues I ran into along the way.

1) In my context, I already manage another AKS cluster from my laptop. It took me some time to understand that the Kubernetes provider always tries first to load a config file from a given (or default) location, as stated in the Terraform documentation. As a result, I saw some weird behavior when computing the Terraform plan, such as the detection of components like service accounts that were not supposed to exist yet. In fact, the provider had loaded my default config file ($HOME/.kube/config), which was tied to another existing AKS cluster.

2) I had to take care of the resource execution order to get a consistent result. Terraform dependencies can be implicit (one resource references another resource's attributes) or explicit (declared with the depends_on argument).

3) The "local-exec" provisioner remains useful for additional work that cannot be managed directly by a Terraform resource. It was especially helpful for updating some KubeInvaders files with the freshly created cluster's connection info, as well as the URL used to reach KubeInvaders.

4) With the Helm provider, Tiller is only installed when the first Helm chart is deployed.
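Note 1 describes a kubeconfig that the provider picked up implicitly. A quick check before `terraform plan` shows whether such a file is present. The sketch below is a hypothetical helper; `check_default_kubeconfig` is not part of any tool. It only looks at the `KUBECONFIG` variable and the default `$HOME/.kube/config` location. The exact lookup rules depend on the Kubernetes provider version you use.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: warn if a kubeconfig that the Kubernetes
# provider could load implicitly is present (see note 1 above).
# Returns 0 when nothing is found, 1 otherwise.
check_default_kubeconfig() {
  local found=0
  if [ -n "${KUBECONFIG:-}" ]; then
    echo "WARNING: KUBECONFIG is set to '${KUBECONFIG}'"
    found=1
  fi
  if [ -f "$HOME/.kube/config" ]; then
    echo "WARNING: default kubeconfig found at $HOME/.kube/config"
    found=1
  fi
  if [ "$found" -eq 0 ]; then
    echo "OK: no default kubeconfig detected"
  fi
  return "$found"
}
```

In a wrapper script, `check_default_kubeconfig || exit 1` stops before Terraform can run against the wrong cluster.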

The components are deployed as follows:

- Create the Azure resource group for AKS
- Create the AKS cluster
- Load the Kubernetes provider with the new AKS cluster credentials
- Create the Helm Tiller service account
- Bind the Tiller service account to the cluster-admin role (this should be restricted in a production scenario)
- Save the new cluster config into the azure_config file for future connections from other CLI tools
- Create a static Azure public IP address and DNS label for the ingress load balancer
- Create new namespaces for the ingress controller, cert-manager and KubeInvaders
- Load the Helm provider with the new AKS cluster credentials
- Deploy the ingress controller and its configuration
- Deploy cert-manager, the cluster issuer and their configuration
- Install KubeInvaders
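You do not write the order above anywhere in Terraform. As described in note 2, Terraform derives it from the dependency graph built from attribute references and `depends_on`. As a rough analogy, the sketch below feeds a simplified subset of the dependency edges in K8s_main.tf to the POSIX `tsort` utility, which prints one valid creation order. This is an illustration only. Terraform builds its own graph (`terraform graph` prints it in DOT format) and creates independent resources in parallel. The function name `resource_order` is hypothetical.

```bash
#!/usr/bin/env bash
# Simplified dependency edges from K8s_main.tf: "A B" means A must exist before B.
# tsort prints one valid topological order; independent resources may be
# listed in any relative order.
resource_order() {
  tsort <<'EOF'
azurerm_resource_group.aks-ci azurerm_kubernetes_cluster.aks-ci
azurerm_kubernetes_cluster.aks-ci kubernetes_service_account.tiller
kubernetes_service_account.tiller kubernetes_cluster_role_binding.tiller
azurerm_kubernetes_cluster.aks-ci null_resource.save-kube-config
azurerm_kubernetes_cluster.aks-ci azurerm_public_ip.nginx_ingress
azurerm_kubernetes_cluster.aks-ci kubernetes_namespace.nginx_ingress
kubernetes_cluster_role_binding.tiller helm_release.nginx_ingress
azurerm_public_ip.nginx_ingress helm_release.nginx_ingress
kubernetes_namespace.nginx_ingress helm_release.nginx_ingress
helm_release.nginx_ingress helm_release.cert_manager
helm_release.nginx_ingress null_resource.kubeinvaders
helm_release.cert_manager null_resource.kubeinvaders
EOF
}
```

Running `resource_order` always lists the resource group first and the cluster before the Tiller service account. The exact position of unrelated resources, such as the public IP and the namespace, may vary.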

To set up cert-manager and the cluster issuer, and to create an ingress controller with a static public IP address and TLS certificates, I followed the Microsoft documentation.
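This post does not show the content of `cluster_issuer/cluster-issuer.yaml`. As an illustration only, the hypothetical helper below renders a Let's Encrypt `ClusterIssuer` in the cert-manager 0.6 format. That format uses `certmanager.k8s.io/v1alpha1`, matching the release-0.6 CRDs applied in K8s_main.tf. The helper takes its values from `letsencrypt_environment` and `letsencrypt_email_address` in variables.tf. The field layout is an assumption based on cert-manager 0.6 ACME issuers. The `letsencrypt-prod` name is also an assumption. Newer cert-manager releases use `cert-manager.io/v1` and a different `solvers` syntax.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a cert-manager 0.6 ClusterIssuer manifest.
# $1 = issuer name (letsencrypt-staging or letsencrypt-prod), $2 = ACME email.
render_cluster_issuer() {
  local name="$1" email="$2" server
  case "$name" in
    letsencrypt-staging) server="https://acme-staging-v02.api.letsencrypt.org/directory" ;;
    letsencrypt-prod)    server="https://acme-v02.api.letsencrypt.org/directory" ;;
    *) echo "unknown issuer: $name" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: certmanager.k8s.io/v1alpha1
kind: ClusterIssuer
metadata:
  name: ${name}
spec:
  acme:
    server: ${server}
    email: ${email}
    privateKeySecretRef:
      name: ${name}
    http01: {}
EOF
}
```

For example, `render_cluster_issuer letsencrypt-staging you@example.com > cluster_issuer/cluster-issuer.yaml` writes the file consumed by the cert_manager provisioner. Starting with the staging server avoids hitting Let's Encrypt production rate limits while testing.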

For KubeInvaders, I simply cloned the project into the same directory as my Terraform files and used its Kubernetes deployment file.
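The `sed` calls in the `null_resource.kubeinvaders` resource patch `deploy_kubeinvaders.sh` and the ingress template with the public host name. The sketch below runs the same kind of substitutions against throwaway copies, so you can check them before wiring them into a `local-exec` provisioner. The function names are hypothetical. The sketch assumes GNU sed: the `0,/regex/` address and `-i` without a backup suffix are GNU behaviors. To replace only the first match, use the form `0,/re/s//replacement/`, where the empty regex in `s//` reuses `re`.

```bash
#!/usr/bin/env bash
# Hypothetical helpers mirroring the local-exec sed commands (GNU sed assumed).

# Replace only the first ROUTE_HOST=... line of a script, in place.
set_route_host() {
  local script="$1" fqdn="$2"
  sed -i "0,/ROUTE_HOST=.*/s//ROUTE_HOST=${fqdn}/" "$script"
}

# Render the ingress manifest by replacing the ||toto|| placeholder
# (in GNU basic regular expressions, a bare | is a literal character).
render_ingress() {
  local template="$1" output="$2" fqdn="$3"
  sed "s/||toto||/${fqdn}/" "$template" > "$output"
}
```

For example, `set_route_host KubeInvaders/deploy_kubeinvaders.sh xxxx-ingress.westeurope.cloudapp.azure.com` rewrites only the first `ROUTE_HOST=` assignment and leaves any later ones untouched.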

Here is the bash script I use to save my current kubeconfig file before running Terraform:

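```bash
#!/bin/bash
# Move all K8s contexts out of the way before applying Terraform, so that
# the Kubernetes provider cannot fall back on $HOME/.kube/config.
# Source this file (". ./script.sh") if the final KUBECONFIG export must
# persist in your current shell; executed as ./script.sh, it only affects
# the script's own process.

read -r -p "Press [Enter] to save current K8s contexts ..."
export KUBECONFIG=
if [ -f "$HOME/.kube/config" ]; then
  mv "$HOME/.kube/config" "$HOME/.kube/config.sav"
fi

# Generate the Terraform plan
read -r -p "Press [Enter] to generate plan ..."
terraform plan -out out.plan

# Apply the Terraform plan
read -r -p "Press [Enter] to apply plan ..."
terraform apply out.plan

# Restore the saved K8s contexts
read -r -p "Press [Enter] to restore default K8s contexts ..."
if [ -f "$HOME/.kube/config.sav" ]; then
  mv "$HOME/.kube/config.sav" "$HOME/.kube/config"
fi

# Point kubectl at the new AKS cluster (file written by Terraform)
export KUBECONFIG=./.kube/azure_config
```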
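One weakness of the script above is its failure handling. If `terraform plan` or `terraform apply` fails and you abort, your original kubeconfig stays renamed. The sketch below is a hypothetical variant that uses a bash `trap` on `EXIT` to restore the file whether the wrapped command succeeds or fails. `with_kubeconfig_moved_aside` is an illustrative name. The command runs in a subshell so that the trap does not leak into your interactive shell.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: move $HOME/.kube/config aside, run a command,
# and restore the file when the command finishes, whatever its exit status.
with_kubeconfig_moved_aside() {
  (
    cfg="$HOME/.kube/config"
    if [ -f "$cfg" ]; then
      mv "$cfg" "$cfg.sav"
      # Runs when this subshell exits; the command's exit status is preserved.
      trap 'mv "$cfg.sav" "$cfg"' EXIT
    fi
    unset KUBECONFIG
    "$@"
  )
}
```

This trades the interactive prompts for a single call, for example `with_kubeconfig_moved_aside sh -c 'terraform plan -out out.plan && terraform apply out.plan'`.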

Here are my Terraform files:

- variables.tf

```hcl
variable "client_id" {}

variable "client_secret" {}

variable "agent_count" {
  default = 3
}

variable "ssh_public_key" {
  default = "~/.ssh/id_rsa.pub"
}

variable "dns_prefix" {
  default = "aksci"
}

variable "cluster_name" {
  default = "aksci"
}

variable "resource_group_name" {
  default = "aks-grp"
}

variable "location" {
  default = "westeurope"
}

variable "domain_name_label" {
  default = "xxxx-ingress"
}

variable "letsencrypt_email_address" {
  default = "[email protected]"
}

variable "letsencrypt_environment" {
  default = "letsencrypt-staging"
}
```

- K8s_main.tf

```hcl
###############################################
# Create Azure Resource Group for AKS #
###############################################
resource "azurerm_resource_group" "aks-ci" {
  name     = "${var.resource_group_name}"
  location = "${var.location}"

  tags = {
    Environment = "CI"
  }
}

###############################################
# Create AKS cluster #
###############################################
resource "azurerm_kubernetes_cluster" "aks-ci" {
  name                = "${var.cluster_name}"
  location            = "${azurerm_resource_group.aks-ci.location}"
  resource_group_name = "${azurerm_resource_group.aks-ci.name}"
  dns_prefix          = "${var.dns_prefix}"
  kubernetes_version  = "1.14.6"

  role_based_access_control {
    enabled = true
  }

  linux_profile {
    admin_username = "clustadmin"

    ssh_key {
      key_data = "${file("${var.ssh_public_key}")}"
    }
  }

  agent_pool_profile {
    name            = "agentpool"
    count           = "${var.agent_count}"
    vm_size         = "Standard_DS2_v2"
    os_type         = "Linux"
    os_disk_size_gb = 30
  }

  addon_profile {
    kube_dashboard {
      enabled = true
    }
  }

  service_principal {
    client_id     = "${var.client_id}"
    client_secret = "${var.client_secret}"
  }

  tags = {
    Environment = "CI"
  }
}

###############################################
# Load Provider K8s #
###############################################
provider "kubernetes" {
  alias = "aks-ci"

  # With 1.x versions of the Kubernetes provider, ~/.kube/config is loaded
  # by default (see note 1); disable it so only the credentials below are used.
  load_config_file = false

  host                   = "${azurerm_kubernetes_cluster.aks-ci.kube_config.0.host}"
  client_certificate     = "${base64decode(azurerm_kubernetes_cluster.aks-ci.kube_config.0.client_certificate)}"
  client_key             = "${base64decode(azurerm_kubernetes_cluster.aks-ci.kube_config.0.client_key)}"
  cluster_ca_certificate = "${base64decode(azurerm_kubernetes_cluster.aks-ci.kube_config.0.cluster_ca_certificate)}"
}

###############################################
# Create tiller service account #
###############################################
resource "kubernetes_service_account" "tiller" {
  provider = "kubernetes.aks-ci"

  metadata {
    name      = "tiller"
    namespace = "kube-system"
  }

  automount_service_account_token = true

  depends_on = ["azurerm_kubernetes_cluster.aks-ci"]
}

###############################################
# Create tiller cluster role binding #
###############################################
resource "kubernetes_cluster_role_binding" "tiller" {
  provider = "kubernetes.aks-ci"

  metadata {
    name = "tiller"
  }

  # cluster-admin is convenient for a CI/demo cluster; use a narrower role in production
  role_ref {
    kind      = "ClusterRole"
    name      = "cluster-admin"
    api_group = "rbac.authorization.k8s.io"
  }

  subject {
    kind      = "ServiceAccount"
    name      = "${kubernetes_service_account.tiller.metadata.0.name}"
    api_group = ""
    namespace = "kube-system"
  }

  depends_on = ["kubernetes_service_account.tiller"]
}

###############################################
# Save kube-config into azure_config file #
###############################################
resource "null_resource" "save-kube-config" {
  triggers = {
    config = "${azurerm_kubernetes_cluster.aks-ci.kube_config_raw}"
  }

  # Write the cluster credentials to .kube/azure_config, readable by the owner only
  provisioner "local-exec" {
    command = "mkdir -p ${path.module}/.kube && echo '${azurerm_kubernetes_cluster.aks-ci.kube_config_raw}' > ${path.module}/.kube/azure_config && chmod 0600 ${path.module}/.kube/azure_config"
  }

  depends_on = ["azurerm_kubernetes_cluster.aks-ci"]
}

###############################################
# Create Azure public IP and DNS for #
# Azure load balancer #
###############################################
resource "azurerm_public_ip" "nginx_ingress" {
  name     = "nginx_ingress-ip"
  location = "${azurerm_kubernetes_cluster.aks-ci.location}"
  # Created in the AKS node resource group (MC_...) so the cluster's load balancer can use it
  resource_group_name = "${azurerm_kubernetes_cluster.aks-ci.node_resource_group}"
  allocation_method   = "Static"
  domain_name_label   = "${var.domain_name_label}"

  tags = {
    environment = "CI"
  }

  depends_on = ["azurerm_kubernetes_cluster.aks-ci"]
}

###############################################
# Create namespace nginx_ingress #
###############################################
resource "kubernetes_namespace" "nginx_ingress" {
  provider = "kubernetes.aks-ci"

  metadata {
    name = "ingress-basic"
  }

  depends_on = ["azurerm_kubernetes_cluster.aks-ci"]
}

###############################################
# Create namespace cert-manager #
###############################################
resource "kubernetes_namespace" "cert-manager" {
  provider = "kubernetes.aks-ci"

  metadata {
    name = "cert-manager"
  }

  depends_on = ["azurerm_kubernetes_cluster.aks-ci"]
}

###############################################
# Create namespace kubeinvaders #
###############################################
resource "kubernetes_namespace" "kubeinvaders" {
  provider = "kubernetes.aks-ci"

  metadata {
    name = "foobar"
  }

  depends_on = ["azurerm_kubernetes_cluster.aks-ci"]
}

###############################################
# Load Provider helm #
###############################################
provider "helm" {
  install_tiller  = true
  service_account = "${kubernetes_service_account.tiller.metadata.0.name}"

  kubernetes {
    # Same reason as for the Kubernetes provider: ignore ~/.kube/config
    load_config_file = false

    host                   = "${azurerm_kubernetes_cluster.aks-ci.kube_config.0.host}"
    client_certificate     = "${base64decode(azurerm_kubernetes_cluster.aks-ci.kube_config.0.client_certificate)}"
    client_key             = "${base64decode(azurerm_kubernetes_cluster.aks-ci.kube_config.0.client_key)}"
    cluster_ca_certificate = "${base64decode(azurerm_kubernetes_cluster.aks-ci.kube_config.0.cluster_ca_certificate)}"
  }
}

###############################################
# Load helm stable repository #
###############################################
# Note: the stable chart repository has since been deprecated and moved to https://charts.helm.sh/stable
data "helm_repository" "stable" {
  name = "stable"
  url  = "https://kubernetes-charts.storage.googleapis.com"
}

###############################################
# Install nginx ingress controller #
###############################################
resource "helm_release" "nginx_ingress" {
  name       = "nginx-ingress"
  repository = "${data.helm_repository.stable.metadata.0.name}"
  chart      = "nginx-ingress"
  timeout    = 2400
  namespace  = "${kubernetes_namespace.nginx_ingress.metadata.0.name}"

  set {
    name  = "controller.replicaCount"
    value = "1"
  }

  # Use the static public IP created above
  set {
    name  = "controller.service.loadBalancerIP"
    value = "${azurerm_public_ip.nginx_ingress.ip_address}"
  }

  # Annotation on the controller service telling Azure which resource group
  # holds the public IP (dots inside the annotation key are escaped for Helm)
  set_string {
    name  = "controller.service.annotations.service\\.beta\\.kubernetes\\.io/azure-load-balancer-resource-group"
    value = "${azurerm_kubernetes_cluster.aks-ci.node_resource_group}"
  }

  depends_on = ["kubernetes_cluster_role_binding.tiller"]
}

###############################################
# Install and configure cert_manager #
###############################################
resource "helm_release" "cert_manager" {
  keyring   = ""
  name      = "cert-manager"
  chart     = "stable/cert-manager"
  namespace = "kube-system"

  # The kubectl calls below need .kube/azure_config, written by save-kube-config
  depends_on = ["helm_release.nginx_ingress", "null_resource.save-kube-config"]

  set {
    name  = "webhook.enabled"
    value = "false"
  }

  # Install the cert-manager 0.6 CRDs
  provisioner "local-exec" {
    command = "kubectl --kubeconfig=${path.module}/.kube/azure_config apply -f https://raw.githubusercontent.com/jetstack/cert-manager/release-0.6/deploy/manifests/00-crds.yaml"
  }

  # Disable cert-manager resource validation on the namespace it runs in
  provisioner "local-exec" {
    command = "kubectl --kubeconfig=${path.module}/.kube/azure_config label namespace kube-system certmanager.k8s.io/disable-validation=\"true\" --overwrite"
  }

  # Create the cluster issuer
  provisioner "local-exec" {
    command = "kubectl --kubeconfig=${path.module}/.kube/azure_config create -f ${path.module}/cluster_issuer/cluster-issuer.yaml"
  }
}

###############################################
# Install Kubeinvaders #
###############################################
resource "null_resource" "kubeinvaders" {
  triggers = {
    build_number = "${timestamp()}" # changes on every run, so this resource is always re-created
    config       = "${azurerm_kubernetes_cluster.aks-ci.kube_config_raw}"
  }

  depends_on = ["helm_release.nginx_ingress", "helm_release.cert_manager"]

  # Set the first ROUTE_HOST assignment to the public IP's FQDN (GNU sed)
  provisioner "local-exec" {
    command = "sed -i \"0,/ROUTE_HOST=.*/s//ROUTE_HOST=${azurerm_public_ip.nginx_ingress.fqdn}/\" ${path.module}/KubeInvaders/deploy_kubeinvaders.sh"
  }

  # Render the ingress manifest from its template
  provisioner "local-exec" {
    command = "sed \"s/||toto||/${azurerm_public_ip.nginx_ingress.fqdn}/\" ${path.module}/KubeInvaders/kubernetes/kubeinvaders-ingress.template > ${path.module}/KubeInvaders/kubernetes/kubeinvaders-ingress.yml"
  }

  provisioner "local-exec" {
    command = "cd ${path.module}/KubeInvaders && ./deploy_kubeinvaders.sh"
  }
}
```

- output.tf

```hcl
# Credentials are marked sensitive so they are hidden in the apply summary;
# they are still stored in plain text in the Terraform state.
output "client_key" {
  value     = "${azurerm_kubernetes_cluster.aks-ci.kube_config.0.client_key}"
  sensitive = true
}

output "client_certificate" {
  value = "${azurerm_kubernetes_cluster.aks-ci.kube_config.0.client_certificate}"
}

output "cluster_ca_certificate" {
  value = "${azurerm_kubernetes_cluster.aks-ci.kube_config.0.cluster_ca_certificate}"
}

output "cluster_username" {
  value = "${azurerm_kubernetes_cluster.aks-ci.kube_config.0.username}"
}

output "cluster_password" {
  value     = "${azurerm_kubernetes_cluster.aks-ci.kube_config.0.password}"
  sensitive = true
}

output "kube_config" {
  value     = "${azurerm_kubernetes_cluster.aks-ci.kube_config_raw}"
  sensitive = true
}

output "host" {
  value = "${azurerm_kubernetes_cluster.aks-ci.kube_config.0.host}"
}
```
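In variables.tf, `client_id` and `client_secret` have no default value. Terraform therefore asks for them interactively unless you supply them, for instance through the `TF_VAR_client_id` and `TF_VAR_client_secret` environment variables. Terraform reads `TF_VAR_<name>` for any declared input variable. In a CI run, a small guard like the hypothetical `require_tf_vars` function below can fail fast before `terraform plan` is called. It is a sketch, not part of the setup described in this post.

```bash
#!/usr/bin/env bash
# Hypothetical guard: fail if any required Terraform variable is not provided
# through a TF_VAR_<name> environment variable (unset or empty counts as missing).
require_tf_vars() {
  local missing=0 name var
  for name in "$@"; do
    var="TF_VAR_${name}"
    # printenv only sees exported variables, which is what Terraform reads
    if [ -z "$(printenv "$var")" ]; then
      echo "missing environment variable: ${var}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Typical usage: `require_tf_vars client_id client_secret && terraform plan -out out.plan`.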

After provisioning my AKS cluster through Terraform, here is the fun result … 🙂

Et voilà! Next time, I will write about provisioning an AKS cluster through Azure DevOps and a Terraform module.

See you!

By David Barbarin
