One of the most important and basic concepts in Kubernetes is exposing Services. How do you expose a Kubernetes Service deployed inside a cluster to outside traffic?

Let’s have a look at the different approaches to exposing a Kubernetes Service outside the cluster, and in particular at the role of the Ingress Controller.

NodePort, Load Balancers, and Ingress Controllers

In the Kubernetes world, there are three general approaches to exposing your application.

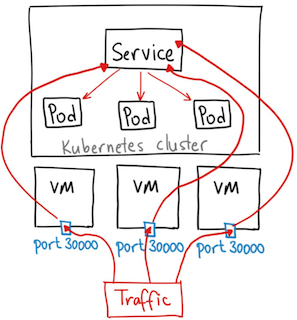

NodePort

A NodePort is an open port on every node of your cluster. Kubernetes transparently routes incoming traffic on the NodePort to your service, even if your application is running on a different node.

Source: https://medium.com/@ahmetb

When using NodePort, keep the following points in mind:

- Only one service per port

- Limited port range: 30000 to 32767 by default

- If a node's IP address changes, you have to deal with it yourself
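To make this concrete, here is a minimal sketch of a NodePort Service. The Service name `my-app`, the label `app: my-app` and the port numbers are hypothetical values chosen for illustration. If `nodePort` is omitted, Kubernetes picks a free port from the node port range itself.

```yaml
# Hypothetical example: expose pods labelled app: my-app on port 30080 of every node.
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  type: NodePort
  selector:
    app: my-app          # pods receiving the traffic
  ports:
  - protocol: TCP
    port: 80             # port of the Service inside the cluster (ClusterIP)
    targetPort: 8080     # port the container listens on
    nodePort: 30080      # must lie in the node port range (30000-32767 by default)
```

Any node IP followed by `:30080` then reaches the Service, which is also why a changing node IP becomes your problem.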

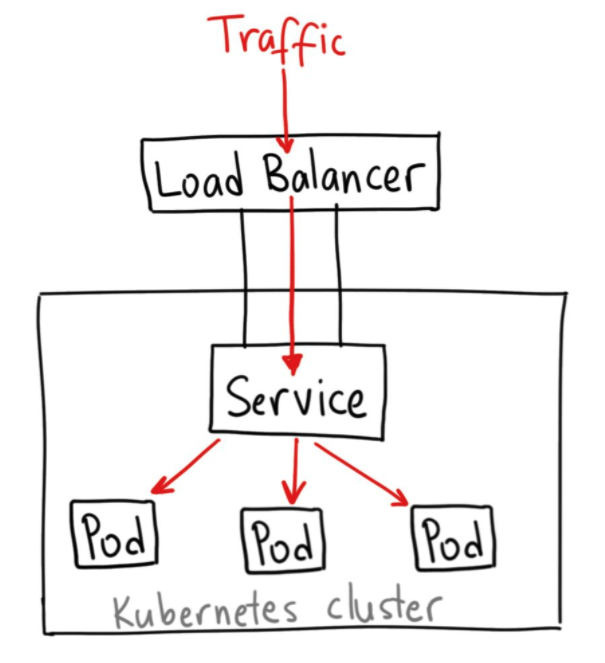

LoadBalancer

Using the LoadBalancer service type automatically provisions an external load balancer (on cloud providers that support it). This external load balancer is associated with a specific IP address and routes external traffic to a Kubernetes service in your cluster.

Source: https://medium.com/@ahmetb

The advantage of the LoadBalancer service type is that it exposes your services directly, with no routing or filtering in between. This means you can send almost any kind of traffic to it: HTTP, TCP, UDP, etc. The downside is that each service you expose with a LoadBalancer gets its own IP address, and you have to pay for one load balancer per exposed service.
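Declaring such a Service mostly comes down to setting `type: LoadBalancer`. In the minimal sketch below, the name `my-app-lb`, the label and the ports are hypothetical. On a supported cloud provider, Kubernetes requests an external load balancer and reports its address in the `EXTERNAL-IP` column of `kubectl get svc` once it is ready.

```yaml
# Hypothetical example: one external load balancer (and one external IP) for this Service.
apiVersion: v1
kind: Service
metadata:
  name: my-app-lb
spec:
  type: LoadBalancer
  selector:
    app: my-app          # pods receiving the traffic
  ports:
  - name: http
    protocol: TCP
    port: 80             # port exposed on the external load balancer
    targetPort: 8080     # port the container listens on
```

Exposing a second application this way means a second Service of this type, and therefore a second load balancer to pay for.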

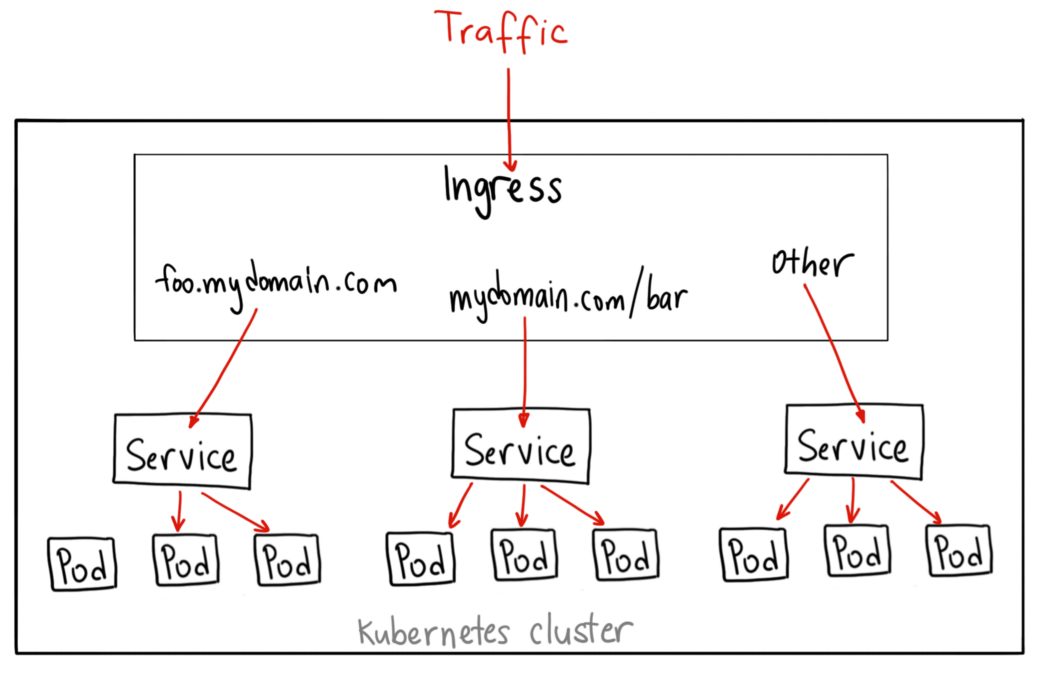

Ingress Controller

Kubernetes supports a high-level abstraction called Ingress, which allows simple host- or URL-based HTTP(S) routing. An Ingress Controller is responsible for reading the Ingress resource information and processing it accordingly. Unlike the service types above, Ingress is not a type of service: it is a smart router acting as the entry point to the cluster for external communication.
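As an illustration of URL-based routing, the following sketch sends `/app1` and `/app2` on the same host to two different Services behind a single entry point. The host `example.com` and the Services `app1` and `app2` are hypothetical, and the manifest uses the `networking.k8s.io/v1` Ingress API, available since Kubernetes 1.19.

```yaml
# Hypothetical fan-out: one host, two paths, two backend Services.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: fanout-example
spec:
  rules:
  - host: example.com
    http:
      paths:
      - path: /app1
        pathType: Prefix       # matches /app1 and anything below it
        backend:
          service:
            name: app1
            port:
              number: 80
      - path: /app2
        pathType: Prefix
        backend:
          service:
            name: app2
            port:
              number: 80
```

The Ingress Controller watches resources like this one and turns them into its own proxy configuration.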

Source: https://medium.com/@ahmetb

Ingress is the most powerful way to expose your services and has become a standard over time. The Ingress Controller itself is an external component, and multiple Ingress Controllers exist on the market. In the rest of this blog we will see how Ingress Controllers work and how to deploy them, but first we have to take a few points into account.

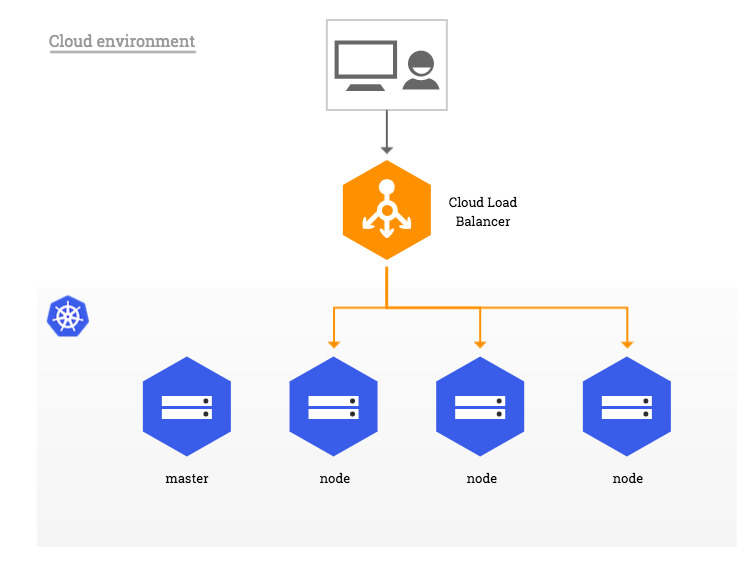

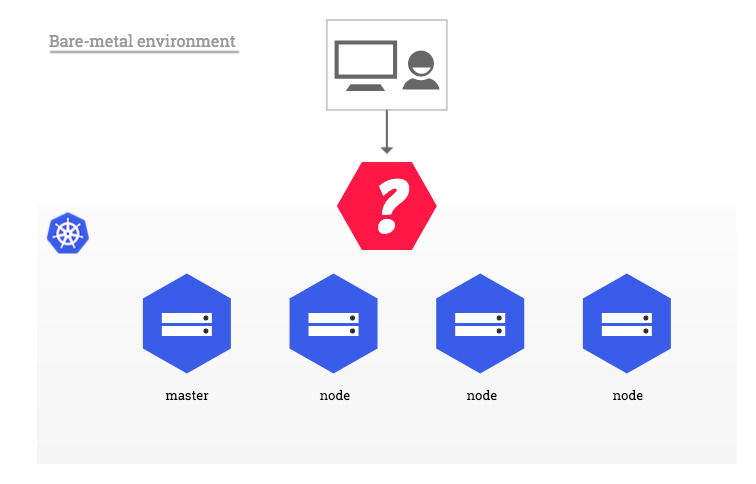

Cloud Environment vs Bare-Metal

In traditional cloud environments, network load balancers are available on demand and become the single point of contact between external clients and the NGINX Ingress controller, and indirectly any application running inside the cluster.

Source: https://kubernetes.github.io/ingress-nginx/images/baremetal/cloud_overview.jpg

Bare-metal clusters lack this capability, so a few recommended approaches exist to work around it (see here: https://kubernetes.github.io/ingress-nginx/images/baremetal)

Source: https://kubernetes.github.io/ingress-nginx/images/baremetal/baremetal_overview.jpg

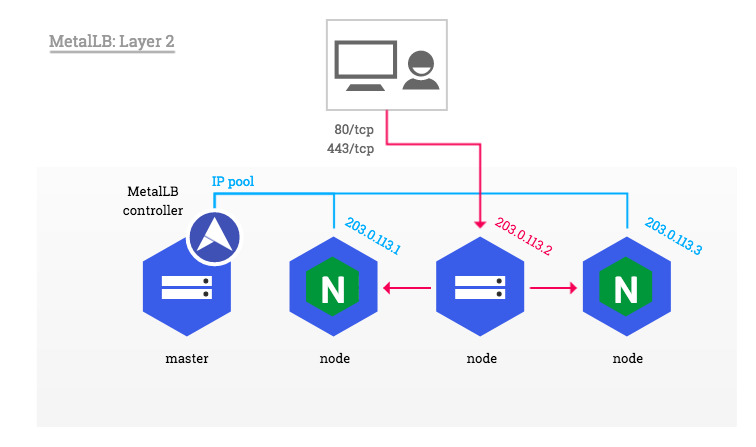

Nginx Ingress Controller combined with MetalLB

In this section we will describe how to use Nginx as an Ingress Controller for our cluster combined with MetalLB which will act as a network load-balancer for all incoming communications.

The principle is simple: we build our deployment on top of a ClusterIP service and use MetalLB as a software load balancer, as shown below:

Source: https://kubernetes.github.io/ingress-nginx/images/baremetal/user_edge.jpg

MetalLB Installation

The official documentation can be found here: https://metallb.universe.tf/

➜ kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.3/manifests/namespace.yaml
namespace/metallb-system created
➜ kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.3/manifests/metallb.yaml
podsecuritypolicy.policy/controller created
podsecuritypolicy.policy/speaker created
serviceaccount/controller created
serviceaccount/speaker created
clusterrole.rbac.authorization.k8s.io/metallb-system:controller created
clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created
role.rbac.authorization.k8s.io/config-watcher created
role.rbac.authorization.k8s.io/pod-lister created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created
rolebinding.rbac.authorization.k8s.io/config-watcher created
rolebinding.rbac.authorization.k8s.io/pod-lister created
daemonset.apps/speaker created
deployment.apps/controller created
➜ kubectl get all -n metal
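These manifests install MetalLB, but they do not tell it which addresses it may hand out to LoadBalancer services. MetalLB v0.9.x reads that information from a ConfigMap named `config` in the `metallb-system` namespace, and its v0.9 installation guide also asks for a `memberlist` secret on first install (`kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"`). A minimal layer 2 configuration could look like the sketch below; the pool name and the address range are hypothetical and must be replaced with free addresses from your own node network. Note that MetalLB 0.13 and later replaced this ConfigMap with `IPAddressPool` and `L2Advertisement` resources.

```yaml
# MetalLB v0.9.x configuration: one layer 2 pool of addresses for LoadBalancer services.
# The address range is a hypothetical example; pick unused IPs on your node network.
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.1.240-192.168.1.250
```

In layer 2 mode, the speaker pods answer ARP requests for the assigned IP, so no router configuration is needed.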

NGINX Ingress Installation

All installation details can be found here: https://docs.nginx.com/nginx-ingress-controller/installation/installation-with-manifests/

Let’s start by cloning the GitHub repository:

➜ git clone https://github.com/nginxinc/kubernetes-ingress/
➜ cd kubernetes-ingress/deployments
➜ git checkout v1.7.2

Create the namespace and the service account:

➜ kubectl create -f common/ns-and-sa.yaml
namespace/nginx-ingress created
serviceaccount/nginx-ingress created

Create the cluster role and the cluster role binding:

➜ kubectl create -f rbac/rbac.yaml
clusterrole.rbac.authorization.k8s.io/nginx-ingress created
clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress created

Create the secret with a TLS certificate for the default server (for testing purposes only):

➜ kubectl create -f common/default-server-secret.yaml
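The secret created from this file is only meant for testing. Beyond a test setup you would store your own certificate and key as a standard TLS secret, assuming the controller Deployment still references `nginx-ingress/default-server-secret`. The sketch below shows the expected shape; the two values are placeholders for base64-encoded PEM data, so the manifest cannot be applied as-is. Alternatively, `kubectl create secret tls default-server-secret --cert=tls.crt --key=tls.key -n nginx-ingress` builds the same secret from files (the file names are hypothetical).

```yaml
# Shape of a TLS secret for the default server; replace the placeholder values.
apiVersion: v1
kind: Secret
metadata:
  name: default-server-secret
  namespace: nginx-ingress
type: kubernetes.io/tls
data:
  tls.crt: <base64-encoded PEM certificate>   # placeholder
  tls.key: <base64-encoded PEM private key>   # placeholder
```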

Create the default config map:

➜ kubectl create -f common/nginx-config.yaml
configmap/nginx-config created

Create the CRDs for VirtualServer, VirtualServerRoute and TransportServer:

➜ kubectl create -f common/vs-definition.yaml
customresourcedefinition.apiextensions.k8s.io/virtualservers.k8s.nginx.org created
➜ kubectl create -f common/vsr-definition.yaml
customresourcedefinition.apiextensions.k8s.io/virtualserverroutes.k8s.nginx.org created
➜ kubectl create -f common/ts-definition.yaml
customresourcedefinition.apiextensions.k8s.io/transportservers.k8s.nginx.org created
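VirtualServer is NGINX's own alternative to the standard Ingress resource. As a sketch, the routing configured later in this post for `nginx.example.com` could also be written as a VirtualServer. The upstream name `nginx` is arbitrary, and the `k8s.nginx.org/v1` API version and the `action.pass` field are what we expect for the 1.7.x releases, so double-check them against the documentation of the version you deploy.

```yaml
# Sketch: route nginx.example.com to the nginx-deploy Service with a VirtualServer.
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: nginx-deploy
  namespace: default
spec:
  host: nginx.example.com
  upstreams:
  - name: nginx            # arbitrary upstream name, referenced by the route below
    service: nginx-deploy
    port: 80
  routes:
  - path: /
    action:
      pass: nginx          # forward requests to the "nginx" upstream
```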

For TCP and UDP load balancing, create the CRD for GlobalConfiguration:

➜ kubectl create -f common/gc-definition.yaml
customresourcedefinition.apiextensions.k8s.io/globalconfigurations.k8s.nginx.org created
➜ kubectl create -f common/global-configuration.yaml
globalconfiguration.k8s.nginx.org/nginx-configuration created
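The GlobalConfiguration resource is where TCP and UDP listeners are declared; TransportServer resources then reference those listeners by name. As a hedged sketch, a version of `nginx-configuration` with a hypothetical DNS listener on port 5353 could look like this. We assume the `k8s.nginx.org/v1alpha1` API of the 1.7.x preview; the controller must also be started with the `-global-configuration=nginx-ingress/nginx-configuration` argument, and the listener ports have to be exposed by the controller pods and their Service.

```yaml
# Sketch: TCP and UDP listeners that TransportServer resources can reference.
apiVersion: k8s.nginx.org/v1alpha1
kind: GlobalConfiguration
metadata:
  name: nginx-configuration
  namespace: nginx-ingress
spec:
  listeners:
  - name: dns-udp        # hypothetical listener names and port
    port: 5353
    protocol: UDP
  - name: dns-tcp
    port: 5353
    protocol: TCP
```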

Deploy the Ingress Controller as a Deployment, so that it runs only on the worker nodes:

➜ kubectl create -f deployment/nginx-ingress.yaml

Check the deployment status:

➜ kubectl get deployment -o wide -n nginx-ingress
NAME            READY   UP-TO-DATE   AVAILABLE   AGE     CONTAINERS      IMAGES                      SELECTOR
nginx-ingress   1/1     1            1           3m51s   nginx-ingress   nginx/nginx-ingress:1.7.2   app=nginx-ingress

Increase the number of replicas:

➜ kubectl scale --replicas=3 deployment/nginx-ingress -n nginx-ingress
deployment.apps/nginx-ingress scaled

Now check the status of the pods:

➜ kubectl get pods -o wide -n nginx-ingress
NAME                             READY   STATUS    RESTARTS   AGE   IP          NODE                                      NOMINATED NODE   READINESS GATES
nginx-ingress-577f8dff74-69j7w   1/1     Running   0          21m   10.60.1.3   gke-dbi-test-default-pool-2f971eed-0b39   <none>           <none>
nginx-ingress-577f8dff74-gdgf9   1/1     Running   0          12s   10.60.2.5   gke-dbi-test-default-pool-2f971eed-7clq   <none>           <none>
nginx-ingress-577f8dff74-nfpbd   1/1     Running   0          34m   10.60.2.4   gke-dbi-test-default-pool-2f971eed-7clq   <none>           <none>

We can observe that the three ingress controller pods are spread across both worker nodes: one on gke-dbi-test-default-pool-2f971eed-0b39 and two on gke-dbi-test-default-pool-2f971eed-7clq. The deployment is now complete.

External Access to Ingress Controller

To make the controller reachable from outside the cluster, we create a service of type LoadBalancer:

➜ kubectl create -f service/loadbalancer.yaml
service/nginx-ingress created
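For reference, a LoadBalancer Service in front of the controller pods boils down to something like the sketch below. It is not guaranteed to be a verbatim copy of `service/loadbalancer.yaml`, so compare it with the file in your checkout; the selector reuses the `app=nginx-ingress` label shown earlier by `kubectl get deployment`. On bare metal, it is MetalLB that assigns the external IP from its address pool.

```yaml
# Sketch of a LoadBalancer Service for the NGINX Ingress Controller pods.
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress
  namespace: nginx-ingress
spec:
  type: LoadBalancer
  externalTrafficPolicy: Local   # preserve the client source IP; only nodes running a controller pod receive traffic
  selector:
    app: nginx-ingress           # label of the controller pods
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  - name: https
    protocol: TCP
    port: 443
    targetPort: 443
```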

Then we can track the status of the service until we get the external IP address:

➜ kubectl get svc -w -n nginx-ingress
NAME            TYPE           CLUSTER-IP    EXTERNAL-IP      PORT(S)                      AGE
nginx-ingress   LoadBalancer   10.3.247.17   <pending>        80:32420/TCP,443:30400/TCP   33s
nginx-ingress   LoadBalancer   10.3.247.17   35.225.196.151   80:32420/TCP,443:30400/TCP   39s

Nginx Web Server deployment

Once the ingress controller is deployed and configured, we can deploy our application and test the external access.

➜ vi nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    run: nginx
  name: nginx-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      run: nginx-deploy
  template:
    metadata:
      labels:
        run: nginx-deploy
    spec:
      containers:
      - image: nginx
        name: nginx

➜ kubectl create -f nginx-deploy.yaml -n default

Then create a ClusterIP service for our deployment:

➜ kubectl expose deploy/nginx-deploy --port 80 -n default
service/nginx-deploy exposed
➜ kubectl get svc -n default
NAME                   TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
service/nginx-deploy   ClusterIP   10.3.240.25   <none>        80/TCP    4s
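`kubectl expose` is a shortcut: its declarative equivalent is a ClusterIP Service whose selector is copied from the Deployment. A sketch, assuming the `nginx-deploy` Deployment above with its `run: nginx-deploy` selector:

```yaml
# Declarative equivalent of: kubectl expose deploy/nginx-deploy --port 80 -n default
apiVersion: v1
kind: Service
metadata:
  name: nginx-deploy
  namespace: default
spec:
  type: ClusterIP            # default type, reachable only from inside the cluster
  selector:
    run: nginx-deploy        # same selector as the Deployment
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80           # kubectl expose defaults targetPort to --port
```

Keeping the Service in a file makes it easy to version it next to the Deployment.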

The final step is to create an ingress rule that routes requests for the host nginx.example.com to the service nginx-deploy:

➜ vi ingress-nginx-deploy.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-nginx-deploy
spec:
  rules:
  - host: nginx.example.com
    http:
      paths:
      - backend:
          serviceName: nginx-deploy
          servicePort: 80

➜ kubectl create -f ingress-nginx-deploy.yaml
ingress.networking.k8s.io/ingress-nginx-deploy created

➜ kubectl describe ing ingress-nginx-deploy
Name:             ingress-nginx-deploy
Namespace:        default
Address:
Default backend:  default-http-backend:80 (10.0.0.10:8080)
Rules:
  Host               Path  Backends
  ----               ----  --------
  nginx.example.com
                           nginx-deploy:80 (10.0.1.5:80)
Annotations:
Events:
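The `networking.k8s.io/v1beta1` Ingress API used above was removed in Kubernetes 1.22. On newer clusters, the same rule is written with `networking.k8s.io/v1`, where `pathType` is mandatory and the backend is nested under `service`. This sketch assumes a controller release recent enough to support the v1 API (newer than the v1.7.2 used here) and an IngressClass named `nginx`; adjust `ingressClassName` to the class your controller actually watches.

```yaml
# networking.k8s.io/v1 version of the ingress-nginx-deploy rule.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx-deploy
spec:
  ingressClassName: nginx      # assumption: IngressClass name of the controller
  rules:
  - host: nginx.example.com
    http:
      paths:
      - path: /
        pathType: Prefix       # match every path on this host
        backend:
          service:
            name: nginx-deploy
            port:
              number: 80
```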

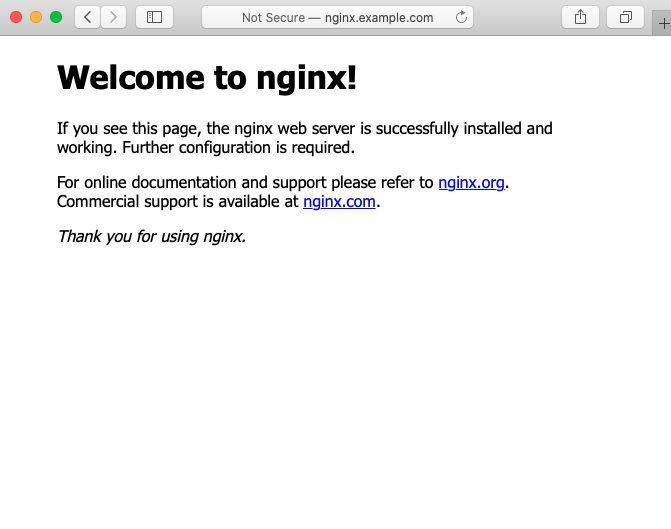

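Open a web browser and test whether our application is reachable from outside the cluster (nginx.example.com must resolve to the external IP of the ingress service, for example through an entry in your hosts file):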
